Tf-idf¶

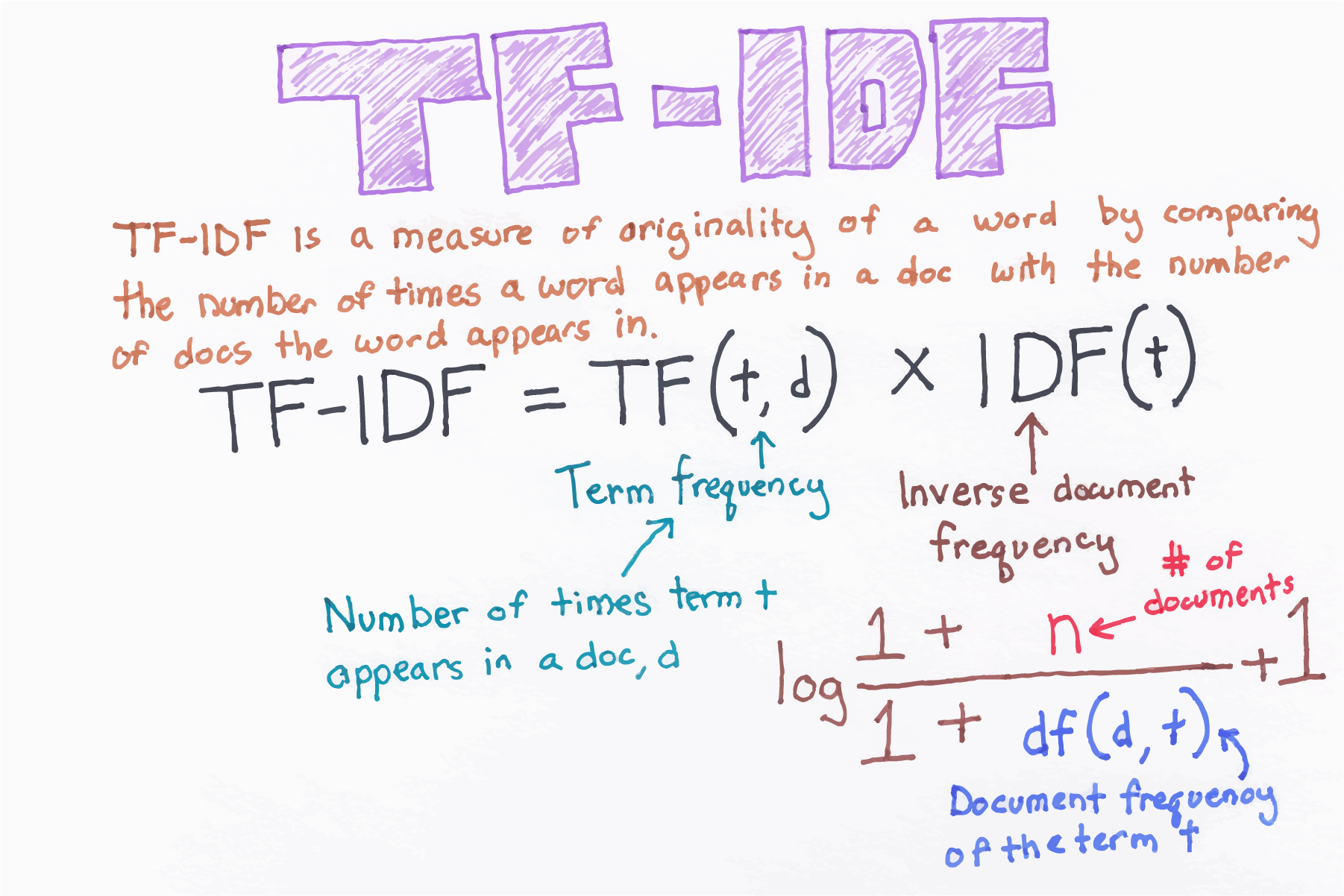

Term Frequency : This gives how often a given word appears within a document.

$\mathrm{TF}=\frac{\text { Number of times the term appears in the doc }}{\text { Total number of words in the doc }}$

Inverse Document Frequency: This gives how often the word appers across the documents. If a term is very common among documents (e.g., “the”, “a”, “is”), then we have low IDF score.

$\mathrm{IDF}=\ln \left(\frac{\text { Number of docs }}{\text { Number docs the term appears in }}\right)$

Term Frequency – Inverse Document Frequency TF-IDF: TF-IDF is the product of the TF and IDF scores of the term.

$\mathrm{TF}\mathrm{IDF}=\mathrm{TF} * \mathrm{IDF}$

In machine learning, TF-IDF is obtained from the class TfidfVectorizer.

It has following parameters:

min_df: remove the words from the vocabulary which have occurred in less than "min_df" number of files.max_df: remove the words from the vocabulary which have occurred in more than _{ maxdf" } total number of files in corpus.sublinear_tf: set to True to scale the term frequency in logarithmic scale.stop_words: remove the predefined stop words in 'english':use_idf: weight factor must use inverse document frequency.ngram_range: (1,2) to indicate that unigrams and bigrams will be considered.

NOTE:

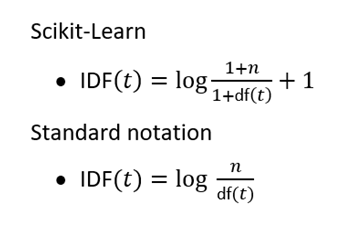

TFis same in sklearn and textbook butIDFif different (to address divide by zero problem)Ref: https://scikit-learn.org/stable/modules/feature_extraction.html#text-feature-extraction

Here,

df(t)is is the number of documents in the document set that contain term t in it.